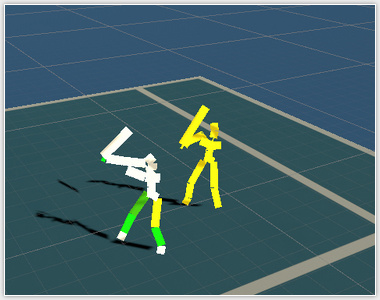

Virtual Reality Tennis Trainer

This research project focuses on 3D motion analysis and motion learning methodologies. We design novel methods for the automated analysis of human motion using machine learning. These methods can be applied in a real training scenario or in a VR training setup. The results of our motion analysis can help players better understand the errors in their motion and improve their performance. Our motion analysis methods are based on professional knowledge from tennis experts at our partner company, VR Motion Learning GmbH & Co KG. We analyze the motion using numerous features, including rotations, positions, and velocities, among others.
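As an illustration of one such feature, velocities can be derived from tracked joint positions by finite differences. The following is a minimal sketch, not the project's implementation: the array layout `(frames, joints, 3)`, the function names, and the use of NumPy are assumptions made for this example.

```python
import numpy as np

def joint_velocities(positions, fps):
    """Velocity vectors from joint positions (hypothetical helper).

    positions: array of shape (frames, joints, 3), e.g. in metres.
    fps: capture rate in frames per second.
    Uses central differences in the interior and one-sided
    differences at the first and last frame.
    """
    positions = np.asarray(positions, dtype=float)
    return np.gradient(positions, 1.0 / fps, axis=0)

def joint_speeds(positions, fps):
    """Scalar speed per frame and joint, shape (frames, joints)."""
    return np.linalg.norm(joint_velocities(positions, fps), axis=-1)

# Usage: one joint moving along x at a constant 2 m/s, captured at 10 fps.
track = np.zeros((5, 1, 3))
track[:, 0, 0] = 0.2 * np.arange(5)
print(joint_speeds(track, fps=10))
```

Features like these could then be fed to a classifier or compared against reference motions; which features the project actually uses beyond those named above is not specified here.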

Our goal is to use virtual reality as a scenario for learning correct tennis technique that will be applicable in a real tennis game. For this purpose, we plan to combine our motion analysis with 3D error visualization techniques and with novel motion learning methodologies. These methodologies may lead to learning correct sport technique, improved performance, and injury prevention.

Virtual Architect

In this project, we developed novel methods for automated geometry creation from the floorplan of a building and for automatic interior design through furniture and material placement. Moreover, we investigated methods for immersive VR exploration and intuitive interaction. The methods investigated in this project enable the automated generation of VR walkthroughs from the floorplan of a building within only a few minutes and with minimal user intervention. Additionally, the developed methods enable reconfiguration of the interior design in real time. We implemented our methods in Unreal Engine 4, which enables the generated virtual walkthroughs to feature high-quality rendering, dynamic lighting (for variable daytime simulation), real walking, and advanced interaction to provide an immersive user experience. This project also addresses open topics in state-of-the-art virtual exploration of and interaction with generated 3D environments.
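To give a rough idea of what geometry creation from a floorplan involves, the sketch below extrudes 2D wall segments into vertical quads. It is a simplified illustration in Python rather than the project's Unreal Engine 4 code; the function names, the z-up convention, and the representation of walls as pairs of 2D points are assumptions, and wall thickness, doors, and windows are ignored.

```python
def extrude_wall(start, end, height):
    """Turn one 2D wall segment into a vertical quad (two triangles).

    start, end: (x, y) floorplan coordinates; z is assumed to point up.
    Returns (vertices, triangles) with triangles indexing into vertices.
    """
    (x0, y0), (x1, y1) = start, end
    vertices = [
        (x0, y0, 0.0), (x1, y1, 0.0),        # bottom edge
        (x1, y1, height), (x0, y0, height),  # top edge
    ]
    triangles = [(0, 1, 2), (0, 2, 3)]
    return vertices, triangles

def extrude_floorplan(walls, height):
    """Extrude every wall segment and merge the results into one mesh."""
    vertices, triangles = [], []
    for start, end in walls:
        v, t = extrude_wall(start, end, height)
        offset = len(vertices)  # shift indices past vertices added so far
        vertices.extend(v)
        triangles.extend(tuple(i + offset for i in tri) for tri in t)
    return vertices, triangles

# Usage: two walls meeting at a corner, 2.5 units high.
mesh_vertices, mesh_triangles = extrude_floorplan(
    [((0, 0), (4, 0)), ((4, 0), (4, 3))], height=2.5
)
```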

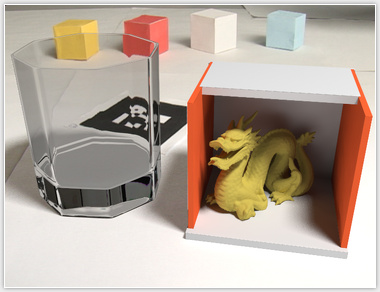

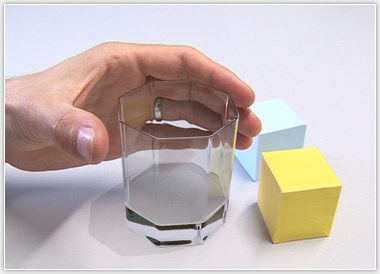

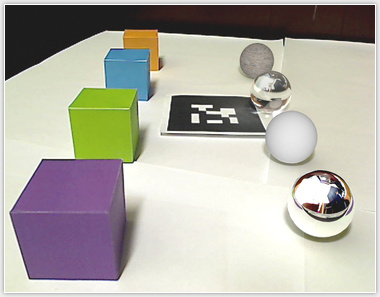

High-Quality Real-Time Global Illumination in AR

This PhD thesis presents novel physically based rendering and compositing algorithms for augmented reality. The developed algorithms calculate the two global illumination solutions required for rendering in AR in a single pass, using a novel one-pass differential rendering algorithm. The presented algorithms are based on GPU ray tracing, which provides high-quality results. The developed rendering system computes various visual effects, including depth of field, shadows, specular and diffuse global illumination, reflections, and refractions. The thesis is available for download as a PDF.
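The two global illumination solutions mentioned above are typically the scene rendered with and without the virtual objects. The sketch below shows the classical differential rendering composite that combines them with the camera image. It illustrates the general technique only, not the thesis's one-pass GPU algorithm; the function name, the NumPy image layout, and the assumption of linear color values in [0, 1] are choices made for this example.

```python
import numpy as np

def differential_composite(camera, with_virtual, without_virtual, virtual_mask):
    """Classical differential rendering composite (illustrative sketch).

    camera:          captured video frame, shape (H, W, 3), values in [0, 1]
    with_virtual:    rendered solution of real + virtual scene, same shape
    without_virtual: rendered solution of the real scene only, same shape
    virtual_mask:    boolean array (H, W), True where a virtual object is visible
    """
    camera = np.asarray(camera, dtype=float)
    with_virtual = np.asarray(with_virtual, dtype=float)
    without_virtual = np.asarray(without_virtual, dtype=float)
    mask = np.asarray(virtual_mask, dtype=bool)[..., None]

    # On real surfaces, add the change in light caused by the virtual objects
    # (shadows darken, reflected light brightens) to the camera image.
    real_pixels = camera + (with_virtual - without_virtual)
    # On virtual objects, the rendered solution is used directly.
    return np.clip(np.where(mask, with_virtual, real_pixels), 0.0, 1.0)
```

Rendering both solutions in one pass, as described above, avoids tracing the scene twice; the composite itself stays the same.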

Differential Irradiance Caching

Fast and realistic synthesis of real videos with computer-generated content has been a challenging problem in computer graphics. It involves computationally expensive light transport calculations. In this research, we present a novel and efficient algorithm, called Differential Irradiance Caching, for calculating diffuse light transport between virtual and real worlds. It produces a high-quality result while preserving interactivity and allowing dynamic geometry, materials, lighting, and camera movement.

The problem of expensive differential irradiance evaluation is solved by exploiting the spatial coherence in indirect illumination using irradiance caching.
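For readers unfamiliar with irradiance caching, the sketch below shows the standard weighted interpolation scheme from the original irradiance caching literature: nearby cache records are blended when their weight exceeds a threshold, and a cache miss signals that a new value must be computed. This is a generic illustration, not the Differential Irradiance Caching implementation; the `CacheRecord` layout, the scalar irradiance value, and the `accuracy` parameter name are assumptions.

```python
import math
from typing import NamedTuple, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]

class CacheRecord(NamedTuple):
    position: Vec3
    normal: Vec3        # unit surface normal at the record
    radius: float       # validity radius (e.g. harmonic mean hit distance)
    irradiance: float   # cached value; would be differential irradiance here

def cache_weight(p: Vec3, n: Vec3, rec: CacheRecord) -> float:
    """Weight of a record at point p with unit normal n.

    w = 1 / (|p - p_i| / R_i + sqrt(1 - n . n_i))
    """
    dist = math.dist(p, rec.position)
    cos_angle = max(-1.0, min(1.0, sum(a * b for a, b in zip(n, rec.normal))))
    denom = dist / rec.radius + math.sqrt(max(0.0, 1.0 - cos_angle))
    return math.inf if denom == 0.0 else 1.0 / denom

def interpolate_irradiance(
    p: Vec3, n: Vec3, records: Sequence[CacheRecord], accuracy: float = 0.3
) -> Optional[float]:
    """Blend usable records; return None on a cache miss."""
    total, total_w = 0.0, 0.0
    for rec in records:
        w = cache_weight(p, n, rec)
        if math.isinf(w):          # query coincides with the record
            return rec.irradiance
        if w > 1.0 / accuracy:     # record is valid at this point
            total += w * rec.irradiance
            total_w += w
    return total / total_w if total_w > 0.0 else None
```

On a miss, a renderer would evaluate the expensive integral at that point and store the result as a new record, so that neighbouring pixels can reuse it.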

High-quality specular light transport in AR

A novel high-quality rendering system for Augmented Reality (AR) was designed and created in this research. We studied ray-tracing-based rendering techniques in AR with the goal of achieving real-time performance and improving visual quality as well as visual coherence between real and virtual objects in the final composited image. We demonstrate a number of realistic and physically correct rendering effects that have not been presented in real-time AR environments before. Examples include high-quality specular effects such as caustics, refraction, and reflection, together with a depth of field effect and anti-aliasing.

Differential Progressive Path Tracing

In this research, we present a novel method for real-time, high-quality previsualization and cinematic relighting. The physically based path tracing algorithm is used within an Augmented Reality setup to preview high-quality light transport. A novel differential version of progressive path tracing is proposed, which calculates the two global light transport solutions that are required for differential rendering.

A real-time previsualization framework is presented, which renders the solution with a low number of samples during interaction and allows for progressive quality improvement. If a user requests the high-quality solution of a certain view, the tracking is stopped and the algorithm progressively converges to an accurate solution.
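The progressive refinement described above can be pictured as a running average over per-frame sample images that is reset whenever the view changes. The sketch below is a minimal illustration under that assumption; the class name, the NumPy buffers, and the reset policy are hypothetical and not taken from the framework.

```python
import numpy as np

class ProgressiveAccumulator:
    """Running mean of noisy path-traced frames (illustrative sketch)."""

    def __init__(self, shape):
        self.mean = np.zeros(shape, dtype=float)
        self.count = 0

    def reset(self):
        """Discard accumulated samples, e.g. when the camera moves."""
        self.mean[...] = 0.0
        self.count = 0

    def add(self, sample):
        """Fold one new frame of samples into the running mean."""
        self.count += 1
        self.mean += (np.asarray(sample, dtype=float) - self.mean) / self.count
        return self.mean

# Usage: during interaction, reset() every frame and show the noisy estimate;
# once tracking is stopped, keep calling add() so the image converges.
acc = ProgressiveAccumulator((2, 2, 3))
acc.add(np.full((2, 2, 3), 1.0))
acc.add(np.full((2, 2, 3), 3.0))
```

In a differential setting, one such accumulator would be kept for each of the two light transport solutions, or a single one for their difference.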

Physically-based depth of field in augmented reality

In this research, a novel method for rendering and compositing video in augmented reality is presented. The aim of the research is to calculate the physically correct depth of field caused by a lens with a finite-sized aperture. In order to simulate light transport correctly, ray tracing is used and combined with differential rendering in a single pass to compose the final augmented video.

The image is rendered entirely on GPUs, so an augmented video can be produced at interactive frame rates in high quality. The proposed method runs on the fly, and no video post-processing is needed.
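Depth of field from a finite aperture is commonly simulated in a ray tracer with the thin-lens model: each camera ray starts at a random point on the lens and is aimed at the point where the corresponding pinhole ray crosses the plane of focus. The sketch below shows this ray generation on the CPU in Python. It is a generic illustration rather than the GPU implementation of this work, and the camera-space convention (lens at the origin, looking along +z) and all names are assumptions.

```python
import math

def sample_unit_disk(u1, u2):
    """Map two uniform numbers in [0, 1] to a uniformly distributed disk point."""
    r = math.sqrt(u1)
    theta = 2.0 * math.pi * u2
    return r * math.cos(theta), r * math.sin(theta)

def thin_lens_ray(pinhole_dir, aperture_radius, focus_distance, u1, u2):
    """Generate a depth-of-field camera ray in camera space.

    pinhole_dir: direction of the ray through the lens centre, with z > 0.
    Returns (origin, unit_direction).
    """
    dx, dy, dz = pinhole_dir
    # Point where the pinhole ray crosses the plane of focus z = focus_distance.
    t = focus_distance / dz
    focus = (dx * t, dy * t, focus_distance)
    # Random origin on the lens disk in the plane z = 0.
    lx, ly = sample_unit_disk(u1, u2)
    origin = (aperture_radius * lx, aperture_radius * ly, 0.0)
    d = tuple(f - o for f, o in zip(focus, origin))
    length = math.sqrt(sum(c * c for c in d))
    return origin, tuple(c / length for c in d)
```

All rays generated for one pixel meet at the same point on the plane of focus, so objects there stay sharp, while objects in front of or behind it are blurred in proportion to the aperture radius.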

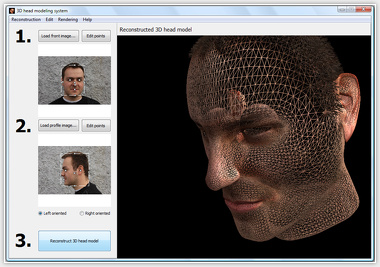

Automatic image-based 3D head modeling

3D Head Modeling System, developed in this work, is software for the automatic creation of a 3D head model from a front and a profile photograph. After the input images are loaded, the facial features are detected automatically. The user can manually adjust the detected positions to achieve better precision. Finally, the 3D head model is reconstructed from the input images.

3D head reconstruction is done in three basic steps. In the first two steps, the user loads the front and profile head photographs. In the final step, the 3D head model is reconstructed automatically. The model can be exported to the Collada format for further use in other applications.

Collada Engine

Collada Engine is a package of libraries for loading and rendering digital content saved in Collada document files. The libraries support loading geometry, nodes, transformations, textures, etc. from .dae files. The 3D content is rendered using OpenGL. The libraries also support loading and compiling shaders and using them in rendering. The libraries can be downloaded from this link or from the project website.
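Because .dae files are XML documents, loading geometry comes down to walking the document tree. The sketch below illustrates this idea in Python with the standard library. It is not part of Collada Engine and does not reflect its API; the function name and the embedded sample document are made up for this example, and it assumes the COLLADA 1.4 namespace.

```python
import xml.etree.ElementTree as ET

# XML namespace used by COLLADA 1.4 documents.
COLLADA_NS = {"c": "http://www.collada.org/2005/11/COLLADASchema"}

def read_float_arrays(dae_text):
    """Collect the float arrays of every mesh source, keyed by geometry id.

    Returns {geometry_id: {source_id: [float, ...]}}.
    """
    root = ET.fromstring(dae_text)
    result = {}
    for geom in root.findall("c:library_geometries/c:geometry", COLLADA_NS):
        sources = {}
        for src in geom.findall("c:mesh/c:source", COLLADA_NS):
            arr = src.find("c:float_array", COLLADA_NS)
            if arr is not None and arr.text:
                sources[src.get("id")] = [float(v) for v in arr.text.split()]
        result[geom.get("id")] = sources
    return result

# Hypothetical minimal document holding the positions of one triangle.
SAMPLE_DAE = (
    '<COLLADA xmlns="http://www.collada.org/2005/11/COLLADASchema" version="1.4.1">'
    '<library_geometries><geometry id="tri"><mesh>'
    '<source id="tri-positions">'
    '<float_array id="tri-positions-array" count="9">0 0 0 1 0 0 0 1 0</float_array>'
    '</source></mesh></geometry></library_geometries></COLLADA>'
)

print(read_float_arrays(SAMPLE_DAE))
```

A full loader would additionally resolve accessors, primitive index lists, scene nodes, and materials before handing the data to the renderer.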

The libraries were created in Borland Developer Studio and require it to function properly. The basic component needed for loading 3D models is the TXMLDocument component from Borland Developer Studio.